A guide to thriving in L&D in 2026

The role of L&D in 2026 is not about managing courses. It's about building resilient, adaptable, and human-centered workforces that thrive alongside intelligent technology. Our moment is now.

The future is not approaching. It has already shown up, grabbed a chair, and started changing our meetings. AI is now in the room for every project. For L&D, that is not a problem to manage. This article is also available as a simple one-page visual.

The emphasis is moving away from simply creating content and towards a more strategic role: enabling human performance and driving behavioral change. L&D’s job is no longer to run a factory for learning materials but to architect change, using AI to deliver support precisely when and where it's needed.

The goal is simple. Build a smarter normal. One where L&D drives measurable outcomes, not just completions.

From content factory to performance partner

If the question is “what should people learn,” we will keep shipping modules. If the question is “what helps them perform better today,” we start building tools that live where work happens. Think of support agents who can ask a verified SOP copilot for answers inside the help desk, or of personalized micro-learnings delivered right inside the performance management tool, targeting the skill gaps that most affect each person's performance.

Here’s how L&D teams should spend their time in 2026. The strategic allocation of resources is shifting, with the majority of time moving to enabling performance rather than creating and delivering courses.

The human advantage is real, but let’s be precise

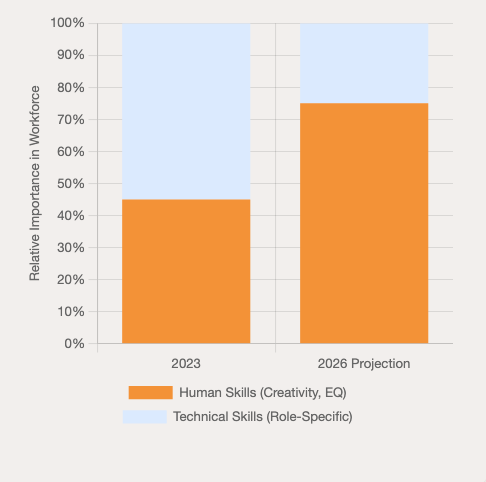

Yes, human skills will decide who thrives, and market data shows that demand for them is rising. While AI automates technical tasks, the skills that make us uniquely human (creative thinking, critical thinking, resilience, and communication) become the key differentiators. L&D must champion them and think hard about how to build them: short simulations with branching outcomes, peer coaching and structured feedback with prompts that force reflection, and stretch projects with real deliverables.

Score behaviors, not completions. Can this person frame a problem, test a path, explain a trade-off, and bring people with them? When those behaviors improve, decision quality and handoffs improve too. Still need convincing? Look at this chart:

Start with your own house

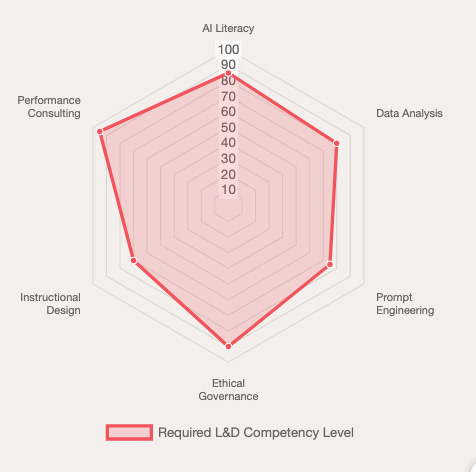

L&D should not ask the business to adopt AI if it cannot use AI responsibly itself. I make AI literacy table stakes for my team: mastering prompts, data basics, bias checks, and knowing when not to use AI at all. We agree on proficiency targets for a few simple use cases, then track the team's progression and the incremental reduction in manual work. Credibility follows when you back it up with actions and facts. Impactful L&D teams must lead by example, building fluency in AI, prompt engineering, data analysis, and the ethical evaluation of AI tools. This chart shows the key competency areas modern L&D teams must master:

Critical thinking is now a safety feature

The volume of AI-generated content is about to drown us, and speed will tempt people to act on the first reasonable answer. Copilots like Gemini and ChatGPT can show their sources, so checking them should become a habit. High-risk moves require a human checkpoint: verification comes before action on any AI suggestion. L&D must teach employees to analyze, challenge, verify, and contextualize information. The result: fewer “we should not have done that” moments.

Personalization that earns its keep

Personalization in L&D sounds like magic, but it is only useful when the design is solid. Let AI route learners, not replace instructional craft. Start from the real task and the mistakes people actually make. Give tight, timely feedback on each attempt. Trigger help in the flow of work when someone struggles, not weeks or months later in a “performance improvement workshop”.

Trust by design, not by press release

People will not use AI-supported learning if they do not trust it. Keep governance human and practical. One page that anyone can read. What data we use. What we never collect. Where a human sits in the loop for higher-risk content. How we test for bias and safety before launch. How we log and resolve incidents. Review that risk log monthly, not annually. Leaders sleep better. So do learners.

Inclusion as default, not as an initiative

AI can remove barriers if we point it at the right problems: live captions and translation that actually work, reading-level transforms and alternative formats on everything we ship, and voice control and transcripts for all videos. Guardrails are crucial, though, such as requiring human review of all high-stakes content and a protocol for monitoring AI-generated translations for bias. The goal is simple: more people can learn, faster.

Treat learning like a product

Courses age like produce, not like wine. Every asset needs an owner, a review date, and telemetry. Retire content that no longer drives outcomes. Measure the full journey, from first view to on-the-job impact. A smaller, fresher micro-learning library beats a giant museum of long, dead courses that help no one. Treat learning objects as products with lifecycle owners.
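To make the owner, review date, and telemetry rule concrete, here is a minimal Python sketch. It assumes a hypothetical catalog where each asset records an owner, a next review date, and one measured outcome lift from telemetry. The field names and the triage rules (negative or zero lift means retire; missing owner, missing telemetry, or an overdue review means review) are illustrative assumptions, not a standard.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass
class LearningAsset:
    # Hypothetical catalog record; all field names are illustrative assumptions.
    title: str
    owner: Optional[str]            # lifecycle owner; None means nobody owns it
    review_date: date               # next scheduled review
    outcome_lift: Optional[float]   # measured change in the linked KPI; None = no telemetry


def triage(assets: list[LearningAsset], today: date) -> dict[str, list[str]]:
    """Sort assets into keep / review / retire buckets under simple assumed rules."""
    buckets: dict[str, list[str]] = {"keep": [], "review": [], "retire": []}
    for a in assets:
        if a.outcome_lift is not None and a.outcome_lift <= 0:
            # Measured, but not moving the KPI: candidate for retirement.
            buckets["retire"].append(a.title)
        elif a.owner is None or a.outcome_lift is None or a.review_date < today:
            # Orphaned, unmeasured, or overdue: needs a human decision.
            buckets["review"].append(a.title)
        else:
            buckets["keep"].append(a.title)
    return buckets


if __name__ == "__main__":
    catalog = [
        LearningAsset("SOP copilot primer", "ops-enablement", date(2026, 6, 1), 0.12),
        LearningAsset("Legacy CRM course", "sales-enablement", date(2026, 6, 1), -0.01),
        LearningAsset("Feedback micro-lesson", None, date(2026, 3, 1), None),
    ]
    print(triage(catalog, today=date(2026, 4, 1)))
```

The design choice here is that nothing gets deleted automatically when data is missing; an asset without an owner or telemetry lands in "review", so a person decides, while only assets with measured, non-positive impact are flagged for retirement.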

Measure what matters

Not everything. Just the few things that tell the story: time-to-proficiency by role, and by skill (ever tried that?); internal mobility percentage with active pathways; content retirement rate; the percentage of assets with an owner and a KPI; and the percentage of courses that have measurably improved their KPIs. That's it.
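Most of these metrics are simple ratios once the data exists. Below is a minimal Python sketch assuming hypothetical records: days from role start to a proficiency sign-off for each person, and per-asset flags for owner, KPI, retirement, and KPI improvement. Using the median for time-to-proficiency, and counting "courses that improved KPIs" only among assets that have a KPI, are my assumptions rather than standard definitions. Internal mobility follows the same `pct()` pattern once you decide its numerator and denominator.

```python
from dataclasses import dataclass
from statistics import median


@dataclass
class Asset:
    # Hypothetical per-asset flags pulled from a content catalog.
    has_owner: bool
    has_kpi: bool
    retired_this_period: bool
    improved_kpi: bool  # assumed meaningful only for assets that have a KPI


def pct(part: int, whole: int) -> float:
    """Percentage rounded to one decimal, returning 0.0 for an empty denominator."""
    return 0.0 if whole == 0 else round(100 * part / whole, 1)


def time_to_proficiency(days_by_role: dict[str, list[int]]) -> dict[str, float]:
    """Median days from start to proficiency sign-off per role (works per skill too)."""
    return {role: median(days) for role, days in days_by_role.items() if days}


def portfolio_metrics(assets: list[Asset]) -> dict[str, float]:
    """Retirement rate, ownership-plus-KPI coverage, and KPI improvement rate."""
    with_kpi = [a for a in assets if a.has_kpi]
    return {
        "retirement_rate_pct": pct(sum(a.retired_this_period for a in assets), len(assets)),
        "owned_with_kpi_pct": pct(sum(a.has_owner and a.has_kpi for a in assets), len(assets)),
        "kpi_improved_pct": pct(sum(a.improved_kpi for a in with_kpi), len(with_kpi)),
    }
```

For example, a role whose last three hires reached sign-off in 30, 40, and 50 days reports a time-to-proficiency of 40 days; run it per skill as well and the gaps usually become obvious.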

Here is the real punchline. L&D stops having to argue for its relevance when it makes work easier and safer today. We do not need permission to start. We need a clear KPI, a small pilot in the flow of work, a lightweight governance pack, and the discipline to retire what no longer serves.

Bonus

An AI Acceptable Use and Data Policy for Learning and Development

Key Principles:

Human first, AI assists: people remain accountable for outcomes and decisions.

Transparency: learners know when and how AI is used.

Privacy by default: collect the minimum data needed for the task.

Security by default: protect data in transit and at rest.

Inclusion by design: accessibility is built in from the start.

Quality and fairness: test for accuracy and bias before launch and during use.

Compliance: follow GDPR, local laws, company policies, and recognized frameworks such as NIST AI RMF and guidance aligned to the EU AI Act.

About me

I’m a leadership development consultant, certified coach, and digital transformation strategist with 18 years of experience leading people, customer operations, L&D, and organizational change. I specialize in guiding leaders through complex transformations in the age of AI, with expertise in change management, leadership development, and workplace culture. As the author of Atomic Leadership on Substack, I share actionable insights that empower leaders, teams, and organizations to thrive in fast-changing environments. Follow or connect with me on LinkedIn.